In the evolving landscape of social media, a significant but largely unrecognized workforce is dedicated to keeping users safe online: content moderators. These workers review distressing and often illegal material posted to platforms such as TikTok, Instagram, and Facebook, including footage of beheadings, mass killings, and child abuse. This hidden work can take a severe toll on their mental health.

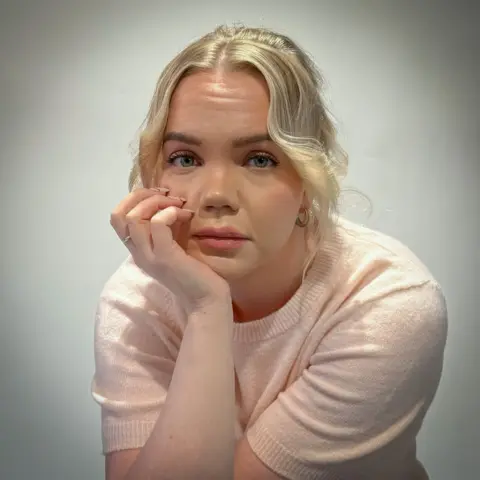

Recent reporting, including a BBC series, has revealed the harrowing experiences of these workers, who are based around the globe and are often employed by third-party companies. Many have left the industry because of the damage to their well-being. Mojez, a former TikTok content moderator in East Africa, says his role involved reviewing hundreds of traumatizing videos so that users would have a safer experience. That responsibility came at a heavy cost to his mental health.

Concerns about moderators' mental health have led to legal action, with former employees claiming that the job caused them lasting psychological harm. In 2020, Meta (then still called Facebook) agreed to a $52 million settlement with moderators over mental health problems arising from their work. Moderators have been described as "keepers of souls" because of how often they witness the final moments of people's lives captured on video.

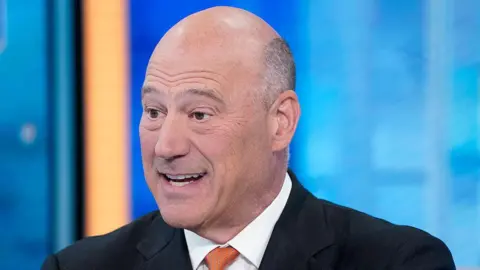

Despite the grim realities of the job, many moderators take pride in their work and in the service it provides to the public, and some compare their role to that of emergency responders. However, there is lingering concern about the rise of AI-powered automated moderation tools. Some in the industry, such as Dave Willner, argue that AI could offer a bright future for content moderation: because software can analyze content without fatigue or emotional distress, it may help overcome the limitations of human moderators.

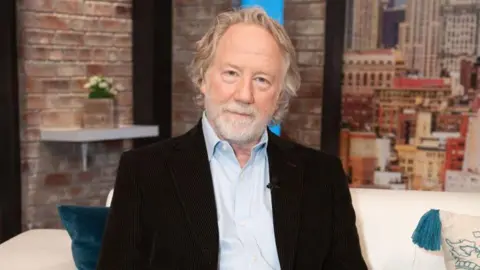

Yet experts warn that AI is not a panacea. Dr. Paul Reilly highlights the dangers of relying too heavily on automated systems, which can over-block legitimate speech and miss nuanced content. Human judgment therefore remains essential, which exposes a systemic problem in the industry: the work is emotionally taxing, and there are not enough moderators to do it.

Tech companies, including TikTok and OpenAI, acknowledge that content moderation is inherently difficult and point to the support measures they provide. TikTok says it fosters a supportive working environment, while OpenAI has expressed gratitude to the human moderators whose challenging work helps train AI systems to identify harmful content.

As public awareness of the sacrifices made by social media moderators grows, a critical conversation is emerging about ethical moderation practices, the role of technology, and the need for better support in the industry. The BBC's ongoing series, "The Moderators," aims to shed light on these essential workers and their struggles, and invites a reconsideration of how we engage with digital platforms.
