More than 80% of Australian children aged eight to 12 used social media or messaging services last year, even though most platforms require users to be at least 13. The findings come from a report by eSafety, Australia's internet regulator, which identified YouTube, TikTok, and Snapchat as the platforms most used by young children. The scale of underage access has prompted Australia to consider a social media ban for under-16s, which is expected to take effect by the end of this year.

The report examined Discord, Google (YouTube), Meta (Facebook and Instagram), Reddit, Snap, TikTok, and Twitch. The companies did not respond to media inquiries about the findings. These platforms generally require users to be at least 13 to create an account, but there are exceptions. YouTube, for instance, allows supervised access for younger users through Google's Family Link and offers a separate app designed for children, YouTube Kids. The report excluded data on YouTube Kids and focused on the main platforms where younger users are active.

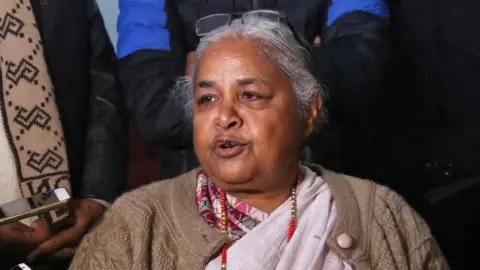

eSafety commissioner Julie Inman Grant said the report would help guide future action and stressed that keeping children safe online is a shared responsibility. That responsibility, she said, falls on social media companies, makers of devices and apps, parents, educators, and politicians.

The research surveyed more than 1,500 children aged eight to 12 and found that 84% had used at least one social media or messaging service since the start of the year. More than half of these children accessed platforms through accounts registered by a parent or guardian, while a third had accounts of their own. Of those with their own accounts, 80% had help from a caregiver in setting them up. Only 13% of these accounts had been closed by the platforms because the user was underage.

The report found that social media companies take inconsistent approaches to checking users' ages when an account is registered. According to the authors, platforms generally lack robust measures to verify age at sign-up, which makes it easy for underage users to enter a false date of birth.

The report also examined how platforms determine the ages of younger users after sign-up. Snapchat, TikTok, Twitch, and YouTube said they use technology to identify potentially underage users while they are actively using the service, relying on how users engage to detect likely age discrepancies. Because this approach depends on activity that has already taken place, children may be exposed to risks before their age is accurately assessed.

The report raises essential questions regarding the responsibility of social media platforms and the effectiveness of current regulations in safeguarding children from potential online harm in an increasingly digital world.