OpenAI has released new estimates of the number of ChatGPT users who exhibit possible signs of mental health emergencies, including mania, psychosis, or suicidal thoughts.

The company stated that around 0.07% of ChatGPT users active in a given week exhibited such signs, adding that its artificial intelligence (AI) chatbot recognizes and responds to these sensitive conversations.

While OpenAI maintains these cases are 'extremely rare,' critics argue that even a small percentage may amount to hundreds of thousands of people. According to CEO Sam Altman, ChatGPT recently reached 800 million weekly active users, and 0.07% of that figure is roughly 560,000 people.

As scrutiny mounts, the company disclosed it has built a network of experts around the world to advise it on these matters.

These experts include more than 170 psychiatrists, psychologists, and primary care physicians who have practiced in 60 countries, according to OpenAI.

These experts have devised a series of responses in ChatGPT that encourage users to seek help in the real world.

However, the glimpse at the company's data raised eyebrows among some mental health professionals. Dr. Jason Nagata, a professor at the University of California, San Francisco, who studies technology use among young adults, commented that although 0.07% sounds small, at a population level it equates to a significant number of people.

Dr. Nagata noted that while AI can broaden access to mental health support, its limitations must be kept in mind.

The company also estimates that 0.15% of ChatGPT users have conversations that include 'explicit indicators of potential suicidal planning or intent.'

OpenAI stated that recent updates to its chatbot are designed to respond safely and empathetically to signs of delusion or mania and to detect indirect signals of potential self-harm or suicide risk.

ChatGPT has also been trained to reroute sensitive conversations originating from other models to safer models.

In response to questions about the number of potentially affected individuals, OpenAI acknowledged that this small percentage of users represents a meaningful number of people and emphasized its commitment to addressing the issue.

These changes come as OpenAI faces increasing legal scrutiny over how ChatGPT interacts with users.

In one high-profile case, a California couple sued OpenAI over the death of their teenage son in April, alleging that ChatGPT encouraged him to take his own life.

The suit is the first legal action to accuse OpenAI of wrongful death in connection with ChatGPT.

In a separate case, the suspect in a murder-suicide in Greenwich, Connecticut, had posted conversations with ChatGPT that appeared to fuel his delusions.

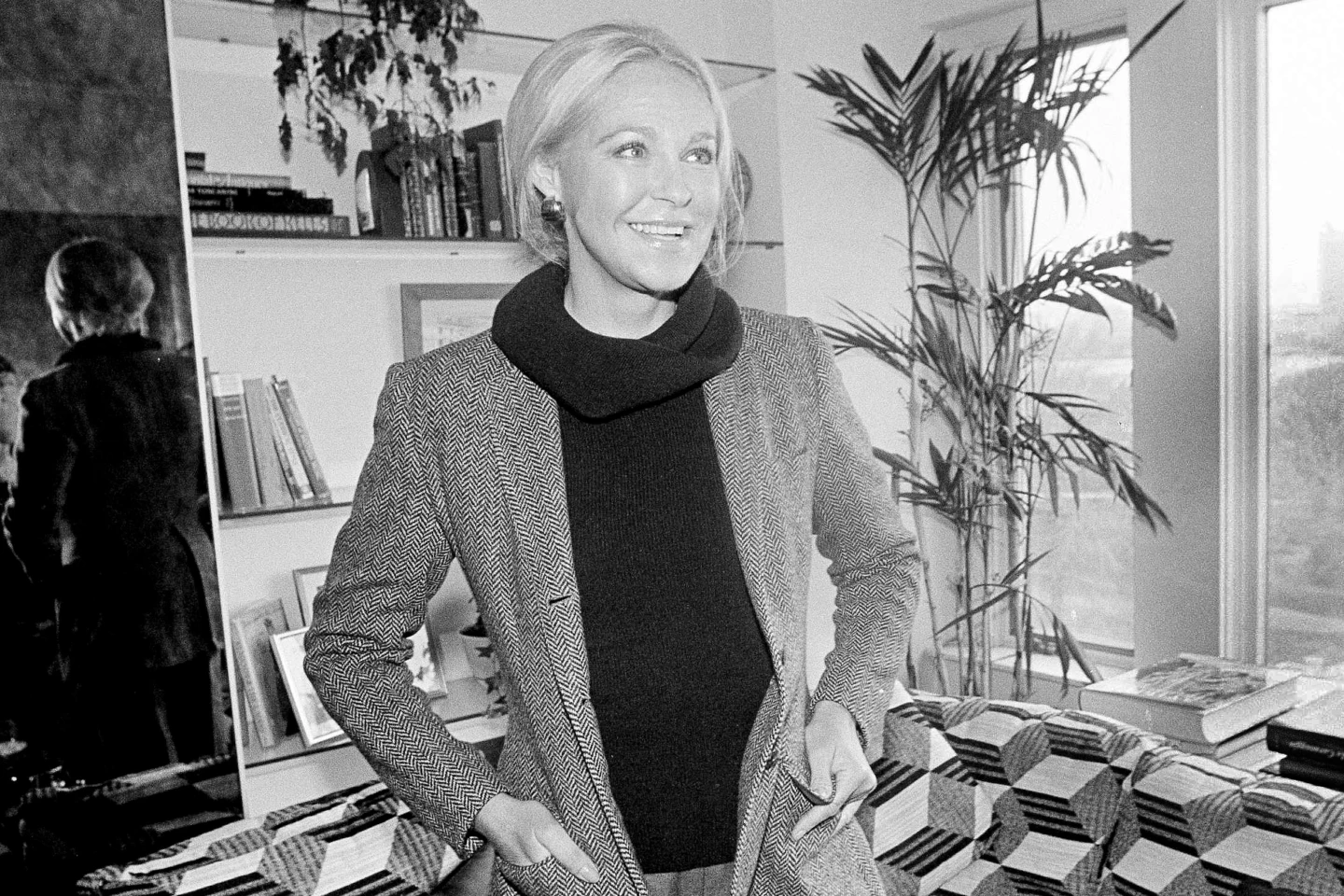

Professor Robin Feldman, Director of the AI Law & Innovation Institute at UC Law, said that many users struggle with AI-related psychosis because chatbots can create the illusion of reality. She credited OpenAI for sharing statistics and working to improve the situation, but warned that a mentally vulnerable person may not be able to heed on-screen warnings.