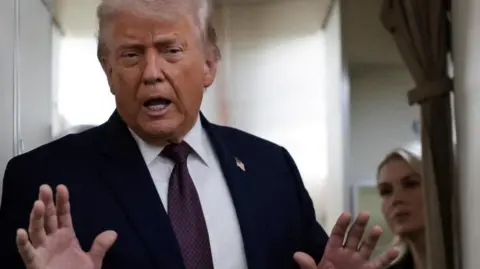

LOS ANGELES (AP) — The Trump administration has become increasingly notable for its use of AI-generated content on social media, posting both cartoonish visuals and more realistic images, such as the recently shared altered photograph of civil rights attorney Nekima Levy Armstrong, which depicted her in tears following her arrest. The image raises new questions about the boundary between reality and fabrication in official government communications.

After Homeland Security Secretary Kristi Noem tweeted the original image from Levy Armstrong's arrest, the White House shared a manipulated version that portrayed her weeping. The post added to the broader debate over AI-generated content in politics, especially after the fatal shootings by the Border Patrol in Minneapolis.

Critics, especially those who study misinformation, have expressed concern that such practices further erode public trust in the government. As AI-generated imagery proliferates on social media, doubts are growing about the accuracy of the visual information the public consumes.

Amid the criticism, White House officials, including Deputy Communications Director Kaelan Dorr, asserted that “memes will continue,” and Deputy Press Secretary Abigail Jackson mocked the backlash. The responses followed significant scrutiny of the altered image.

David Rand, a professor at Cornell University, indicated that labeling the modified image a meme downplays its seriousness compared with the more cartoonish content the administration has released previously. He said that posting such a sensitive image could prompt varied interpretations and reactions.

In an evolving digital landscape where memes often carry layered meanings, the incident illustrates the tools being used to engage Trump's base, particularly supporters active on social platforms. Zach Henry, a Republican communications consultant, remarked that memes, seen primarily by those familiar with online culture, could mislead less savvy viewers, fueling further debate about the distortion of truth.

Experts such as Michael A. Spikes have pointed out that significant alterations serve to build narratives around politically charged subjects, ultimately complicating public discourse about trust in federal information. He emphasized the loss of confidence that results when authoritative channels share synthetic material.

Concerns about misinformation extend beyond this example to fabrications shared on social media more broadly, particularly those tied to significant societal events. Experts foresee a troubling trend driven by easily manipulated AI content. Ramesh Srinivasan of UCLA expressed worry about a widespread lack of trust in accurate information sources. The current environment points to a pressing need for stronger media literacy and for systems that authenticate the origins of media.

The debate continues as the impact of AI-enhanced content becomes an increasingly central issue in discussions of media credibility and public trust.